Hi All,

First and foremost, congratulations to Patrick!

Second, I realize that my in-class presentation was a little short, so I want to clarify a few things about my project proposal here. Below is an excerpt from my proposal that I hope will answer some of your questions. Please feel free to ask more, and let me know if you would like me to share the entire paper. Thank you all for an amazing semester, and I’m looking forward to working with you in the future!

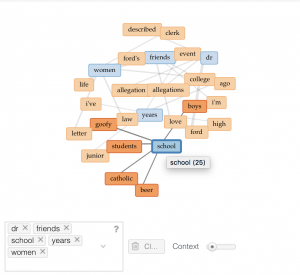

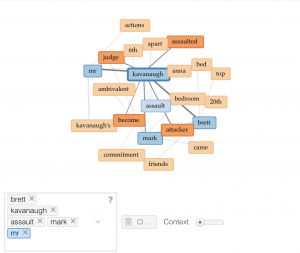

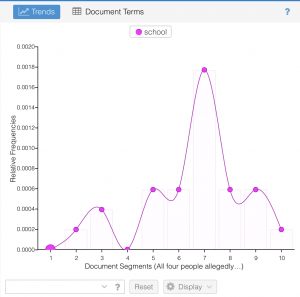

“I propose that we create two digital tools that can be used as an alternative to typical digital asset management software such as Luna Imaging, which is often used by libraries and archives. The tools I am proposing will improve the collection search process and increase social media presence. Ideally, they will reach a broad audience and drive traffic to archives and cultural heritage institutions, whose community is normally limited to scholars and researchers. These tools will make searching collections fun, smooth, and easy in the form of a mobile application (an app) and a ‘Publish to Social Media’ button on the upload page of Luna’s back end. Throughout this proposal I will use the terms ‘front end’ and ‘back end’. The front end is the presentation layer of software, a website, or an app, and the back end is the data access layer, usually where code is written [1].

I’m proposing these very different tools as one project because they are in fact intertwined. To reach the general public, whose interest in history is largely untapped, we must make it easier both to reach out to them through social media and for them to search archive websites.

As it stands, searching archives’ digital collections is problematic. If the digital archivist uploading material is not proficient in web design, or if the archive’s collections are disorganized on the back end, the front end of the archive’s website can be tricky to navigate. I hope to tackle this problem with the app by allowing less freedom in how material is organized on the back end, which will produce a more concise, easy-to-understand digital gallery.

In addition to creating an app with a digital gallery, I propose partnering with the New York City Municipal Archives (NYCMA) to create an interactive map of New York City. The map will show an image of every building, with its associated address, from the 1940s, courtesy of the NYCMA’s recently completed tax photo project. It will also show any digitized images associated with an address or area in New York City, such as a 1927 crime scene photo taken at 125 Mulberry St.

On its own, the mobile app would ease the learning curve of navigating different archives’ digital collections, a learning curve many researchers and scholars have already overcome. But it is equally important to reach the wider public, many of whom are unaware of the fascinating resources the app could put right at their fingertips.

This wider audience could be reached right from the source with a ‘Publish to Social Media’ option on Luna’s upload page. It would make it easier for the NYCMA to reach potential patrons in a way that keeps pace with the fast-moving internet, without having to publish separately on multiple social media platforms.”